FRU-Net: Robust Segmentation of Small Extracellular Vesicles

This web page supplements the paper "Deep-Learning-Based Segmentation of Small Extracellular Vesicles in Transmission Electron Microscopy Images". The paper presents a deep-learning-based image analysis pipeline built around a fully residual U-net architecture, hence the name FRU-net.

Summary

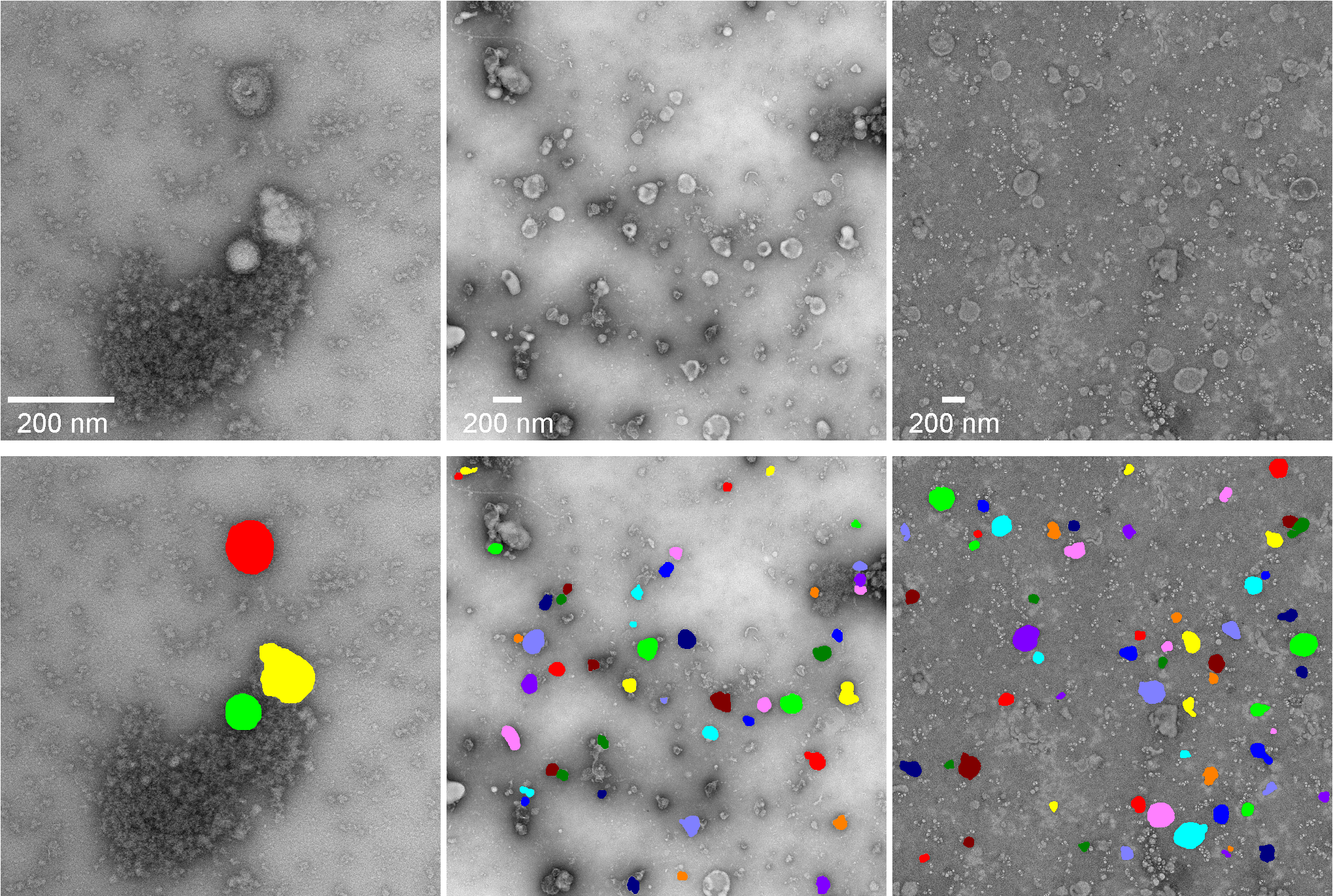

Small extracellular vesicles (sEVs) are cell-derived vesicles of nanoscale size (∼30–200 nm) that carry information between cells. Their content reflects the cell they originate from and its physiological condition. Transmission electron microscopy (TEM) images can provide valuable information on the shape and even the composition of individual sEVs. Unfortunately, sample preparation for TEM imaging is a complex procedure. TEM images are therefore frequently highly heterogeneous and contain artifacts, which makes automatic quantification of sEVs in them an extremely difficult task. FRU-net is a deep-learning-based image analysis pipeline for robust segmentation of sEVs in TEM images. It substantially outperforms TEM ExosomeAnalyzer in both detection and segmentation performance.

Resources

The implementation of FRU-net as a standalone Python module, together with the image datasets and their reference annotations, can be downloaded here.

Conditions of Use

The resources are provided free of charge for noncommercial and research purposes. Use for any other purpose is generally possible, but only with the explicit permission of the authors. If you have any questions, please do not hesitate to contact us at estibaliz.gomez@uc3m.es and xmaska@fi.muni.cz.

In addition, you are expected to include adequate references whenever you present or publish results that are based on the resources provided.

References

- Estibaliz Gómez-de-Mariscal, Martin Maška, Anna Kotrbová, Vendula Pospíchalová, Pavel Matula, Arrate Muñoz-Barrutia. Deep-Learning-Based Segmentation of Small Extracellular Vesicles in Transmission Electron Microscopy Images. Scientific Reports, 9(1):13211, 2019.

Acknowledgment

This work was supported by the Czech Science Foundation under grant number GA17-05048S and by the Spanish Ministry of Economy and Competitiveness under grant numbers TEC2013-48552-C2-1-R, TEC2015-73064-EXP, and TEC2016-78052-R.